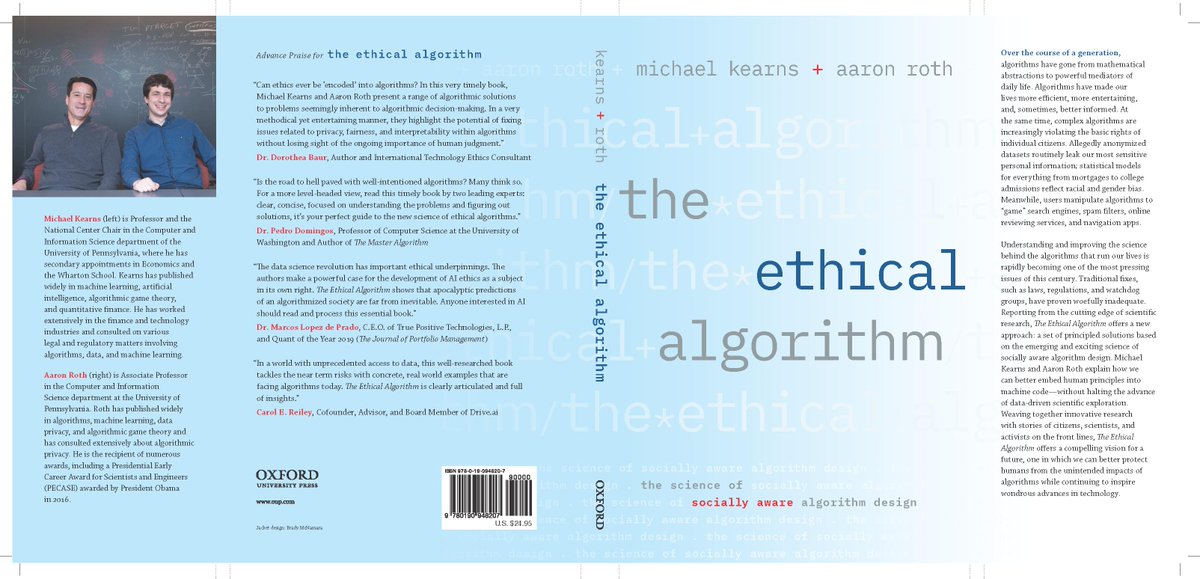

Book Review of ‘The Ethical Algorithm: The Science of Socially Aware Algorithm Design’ by Michael Kearns and Aaron Roth

I am reading ‘The Ethical Algorithm: The Science of Socially Aware Algorithm Design’ by Michael Kearns and Aaron Roth, and it has reminded me why I like computer science.

Computer science is all about carefully specifying problems and what acceptable solutions would look like. This means pinning down, first in precise language and then perhaps in maths, exactly what an algorithm should do and what properties its output should have. As any computer scientist can tell you, designing algorithms usually involves trade-offs; there might be many solutions to your problem, and the “best” solution will change depending on your point of view. It’s not that we computer scientists can’t accept shades of grey. We can. We’ll insist on quantifying the shades of grey, though, and then we’ll reason about them for you in a rigorous way. We might come back to you to ask you to make some hard choices: do you want your algorithm to be accurate or fair? How accurate? How fair? Or even, what sort of fairness do you want (coz you can’t have it all)?

This is very useful because it enables us to move past hand-wavey calls for algorithm designers to become more ethical, or suggestions that algorithms should be regulated in some vague way. Armed with concrete methods for designing algorithms to meet various social needs, we can put in place systems which enact a society’s chosen values (assuming that society can clearly articulate them and they aren’t inherently contradictory). The advances in theoretical computer science explained in this book could bring us to the point where every computer scientist or data scientist is taught to build ethical considerations (such as privacy and fairness) into their algorithms as a matter of routine, provided that the stakeholders have specified which ethical constraints matter to them.

Machine learning algorithms are trained on datasets of examples, with the aim of working out how to produce the “best” possible answers for new examples in the future. Common tasks for machine learners would be categorising images (e.g. does this picture contain a giraffe?), recommending what items a shopper might like to buy next, or – with higher stakes – deciding whether a candidate should be given a job interview. Machine learners never give you anything for free – if you ask one for an accurate answer, don’t assume its output will also be ethical (e.g. unbiased) unless you spell that out very clearly. In short, defining “best” might take some work.

Algorithms for privacy

The first shocker in the chapter on privacy is that “anonymised data isn’t” – that is, there is a real danger that individuals can be identified by comparing several datasets, even if each dataset has been anonymised. The classic example of this is the Netflix Prize competition, for which Netflix released an anonymised dataset of users’ film ratings to enable teams to develop an improved recommendation system (e.g. if you like ‘Harry Potter’, why not try ‘The Golden Compass’?). Some enterprising privacy researchers worked out that even though the names had been taken out of the Netflix data, they could infer the identity of a Netflix user relatively easily if that user had also posted reviews on IMDb under their own name. Oops.
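To see why stripping out names is not enough, here is a deliberately crude linkage attack in Python. The users, films and ratings are invented, and matching on exact (film, rating) pairs is an assumption made for brevity. It is a far simpler method than the one the researchers actually used. The function name `link` and the `min_overlap` parameter are hypothetical.

```python
# Toy data, invented for illustration. The "anonymised" release swaps names
# for opaque IDs but keeps each user's (film, rating) pairs intact.
anonymised = {
    "user_0417": {("Harry Potter", 5), ("The Golden Compass", 4), ("Brazil", 2)},
    "user_0932": {("Amelie", 5), ("Brazil", 5), ("Heat", 3)},
}

# Public reviews posted under real names on some other site (also invented).
public = {
    "Alice": {("Harry Potter", 5), ("Brazil", 2)},
    "Bob": {("Amelie", 5), ("Heat", 3)},
}


def link(anonymised, public, min_overlap=2):
    """Map each anonymised ID to the named reviewer sharing the most (film, rating) pairs."""
    matches = {}
    for anon_id, anon_ratings in anonymised.items():
        best_name, best_overlap = None, 0
        for name, named_ratings in public.items():
            overlap = len(anon_ratings & named_ratings)  # set intersection
            if overlap > best_overlap:
                best_name, best_overlap = name, overlap
        # Only claim a match when enough pairs agree.
        if best_overlap >= min_overlap:
            matches[anon_id] = best_name
    return matches


print(link(anonymised, public))  # {'user_0417': 'Alice', 'user_0932': 'Bob'}
```

Two matching ratings are enough to re-identify both users here. In a large, sparse dataset a handful of ratings can be similarly distinctive, which is what makes this kind of linkage work in practice.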

In general, it is hard to anonymise small datasets, or datasets which might be disclosive when linked with other datasets. The trade-off here is between protecting individuals against the disclosure of sensitive information and enabling data sharing for scientific purposes. The human race will benefit if I contribute genomic information to a research study, but I don’t want my insurance company to infer anything about me as an individual from the resulting public dataset, in case they use it to increase my insurance payments.

Happily, there is an algorithmic solution for this called ‘differential privacy’. It’s the idea that “nothing about an individual should be learnable from a dataset that cannot be learned from the same dataset but with that individual’s data removed” (p36). Imagine you’re conducting a poll about an embarrassing topic (let’s say you want to establish the prevalence of toenail fungus in the population). The NSA are taking a sinister interest in fungus, and will subpoena your research data, compelling you to turn over individual records of people in need of what they euphemistically call “fungicide treatment”. You don’t want that (nobody wants that), so you decide the best approach is as follows. When you call participants to ask them about their toenails, you give them these instructions: “Flip a coin and don’t tell me how it landed. If it is heads, tell me honestly whether you have toenail fungus. But if the coin landed tails, then give me a random answer by doing the following. Flip the coin again and say ‘Yes, I am full of fungus’ if it comes up heads and ‘No, I am fungus free’ if it comes up tails.”

This is fiendishly clever because now, if the NSA comes knocking on someone’s door, that person can always deny that there is anything untoward in their socks: a ‘yes’ might just be the way the second coin landed. The NSA would never know the difference unless they were willing to remove citizens’ footwear on a whim. And yet you, as a researcher, can still infer what you need to about fungus frequency. Although there are errors in the dataset, you introduced them systematically, so you can work backwards to factor them out.
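The “work backwards” step can be made concrete. A respondent with fungus says yes with probability 1/2 + 1/4 = 3/4, and one without says yes with probability 1/4. If a fraction p of the population has fungus, the expected share of ‘yes’ answers is therefore p/2 + 1/4, which gives p = 2 × (share of yes) − 1/2. The Python sketch below simulates the two-coin survey. The 10% true rate, the sample size and the function names are hypothetical, and this is an illustration rather than code from the book.

```python
import random


def randomised_response(has_fungus: bool, rng: random.Random) -> bool:
    """One respondent following the two-coin protocol."""
    if rng.random() < 0.5:       # first coin is heads: answer honestly
        return has_fungus
    return rng.random() < 0.5    # tails: second coin decides, heads means "yes"


def estimate_prevalence(answers: list[bool]) -> float:
    """Undo the known noise: P(yes) = p/2 + 1/4, so p = 2 * P(yes) - 1/2."""
    yes_fraction = sum(answers) / len(answers)
    return 2 * yes_fraction - 0.5


rng = random.Random(42)
true_rate = 0.10  # hypothetical: 10% of people really have fungus
population = [rng.random() < true_rate for _ in range(100_000)]
answers = [randomised_response(person, rng) for person in population]
print(f"estimated prevalence: {estimate_prevalence(answers):.3f}")
```

The price of deniability is noise. The estimate is less precise than a direct poll of the same size would be, so a randomised survey needs more respondents to reach the same accuracy.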

It turns out that you can glue together a series of algorithms which guarantee differential privacy and the result will also be differentially private, although the privacy guarantee weakens as more algorithms are combined. Differential privacy has real-world applications and is used by Google and the US Census Bureau.
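Differential privacy puts a number on the guarantee, usually written ε (epsilon). This is standard notation rather than something the review introduces, and a smaller ε means stronger privacy. The hypothetical Python sketch below, which is not code from the book, computes ε for the coin-flipping survey. A fungus sufferer answers ‘yes’ with probability 3/4 and everyone else with probability 1/4, so ε = ln 3. The sketch then applies the basic composition rule, under which the ε values of the combined algorithms add up.

```python
import math


def randomised_response_epsilon(p_honest: float = 0.5, p_yes_if_random: float = 0.5) -> float:
    """Epsilon for a yes/no randomised-response survey.

    Epsilon is the log of the largest ratio between the chance of a given
    answer from someone with the trait and from someone without it.
    Assumes 0 <= p_honest < 1 and 0 < p_yes_if_random < 1.
    """
    p_yes_true = p_honest + (1 - p_honest) * p_yes_if_random  # has the trait
    p_yes_false = (1 - p_honest) * p_yes_if_random            # does not
    ratios = [
        p_yes_true / p_yes_false,              # answer "yes"
        (1 - p_yes_false) / (1 - p_yes_true),  # answer "no"
    ]
    return math.log(max(max(r, 1 / r) for r in ratios))


def compose(epsilons: list[float]) -> float:
    """Basic sequential composition: the combined procedure is still
    differentially private, with epsilon equal to the sum of the parts."""
    return sum(epsilons)


eps = randomised_response_epsilon()
print(f"one survey: epsilon = {eps:.3f}")                    # ln 3, about 1.099
print(f"asked three times: epsilon = {compose([eps] * 3):.3f}")
```

Setting `p_honest=0.0` makes every answer a pure coin flip, and ε drops to 0. That is perfect privacy, but the survey is also useless for estimating anything.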

How to make algorithms fair

Machine learning classifiers have gained notoriety for being unfair: their output can be sexist and racist. This is not because they have been designed by chauvinist computer scientists who long for the 1940s, but because they are trained on real-world datasets. Algorithms which are trained on datasets of photos with few examples of black faces will have poor accuracy when attempting to classify new images of black people. Algorithms which are trained on text written by humans are sadly more likely to associate men with the word “genius” and women with the word “earring”. The human world is riddled with bias, so our machine learners will reflect that. These algorithms are typically tuned to be as accurate as possible across the whole training set, so they may also amplify bias against minority groups simply because those groups are smaller. The majority group contributes more samples, and so it has more influence on the decisions the algorithm learns. Increasing fairness for one group may reduce accuracy for other groups.
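A toy example shows how a learner that simply maximises overall accuracy can shortchange a smaller group. The data below is invented. Squares make up 90% of it and Circles 10%, and the score at which people become ‘positive’ differs between the two groups. Picking a single cut-off that minimises total errors stands in for training a real classifier, which is an assumption made to keep the sketch short.

```python
# Invented data: (score, label, group). Squares are positive when score >= 5,
# Circles when score >= 3, so the ideal cut-off differs between the groups.
data = [(x, int(x >= 5), "Square") for x in range(10) for _ in range(9)]  # 90 rows
data += [(x, int(x >= 3), "Circle") for x in range(10)]                   # 10 rows


def errors(threshold, rows):
    """Count rows misclassified by the rule 'predict 1 if score >= threshold'."""
    return sum(int(score >= threshold) != label for score, label, _ in rows)


# One shared threshold, chosen to minimise errors over everyone combined.
best = min(range(11), key=lambda t: errors(t, data))
print("chosen threshold:", best)

for group in ("Square", "Circle"):
    rows = [r for r in data if r[2] == group]
    print(group, "error rate:", errors(best, rows) / len(rows))
# Square error rate: 0.0
# Circle error rate: 0.2
```

Moving the threshold to 4 would equalise the two groups' error rates at 10% each, but total errors would rise from 2 to 10. This is a small, concrete case of fairness for one group being paid for with accuracy elsewhere.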

Beyond the bias in the training set, I was fascinated to read about the different reasonable definitions of fairness, and about the mathematical proofs that they cannot all be achieved at once. The book uses the example of a bank which uses machine learning to decide whether to grant loans to fictional people in a society of two racial groups: Circles and Squares. One definition of fairness is that the rates of false positives and false negatives should be the same for Circles and Squares. The same proportion of Circles as Squares should be approved for loans when they actually can’t pay them back, and the same proportion of Circles and Squares should have their loans rejected even though they could have repaid them. However, another definition of fairness is called equality of positive predictive value: among the people who the algorithm predicts will repay the loan, the repayment rates among Circles and Squares should be the same. It turns out that, unless the two groups repay at the same underlying rate or the algorithm makes no mistakes, it is not possible to have equal false positive and false negative rates at the same time as equal positive predictive value. There are also some interesting results on intersectionality. It is possible to be fair to each of several groups (Reds, Blues, Circles, Squares) separately and yet still be unfair to individuals at the intersection of those groups (such as Red Circles or Blue Squares).
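The loan example can be checked with a few lines of arithmetic. In the invented counts below, which do not come from the book, 50 of 100 Circles and 20 of 100 Squares would repay. In both groups the classifier approves 80% of repayers and 10% of non-repayers, so the false positive and false negative rates are equal across groups. Positive predictive value nevertheless comes out at about 0.89 for Circles and 0.67 for Squares. The second half of the sketch illustrates the intersectionality point. It uses a simpler fairness notion, equal approval rates, and assumes four equal-sized subgroups.

```python
from dataclasses import dataclass


@dataclass
class Outcomes:
    """Hypothetical loan outcomes for one group; 'positive' means 'predicted to repay'."""
    tp: int  # approved and repaid
    fp: int  # approved but could not repay
    fn: int  # rejected although they would have repaid
    tn: int  # rejected and would not have repaid

    def false_positive_rate(self) -> float:
        return self.fp / (self.fp + self.tn)  # share of non-repayers approved

    def false_negative_rate(self) -> float:
        return self.fn / (self.fn + self.tp)  # share of repayers rejected

    def positive_predictive_value(self) -> float:
        return self.tp / (self.tp + self.fp)  # share of approved who repay


# Invented counts: 100 applicants per group, different underlying repayment rates.
groups = {
    "Circles": Outcomes(tp=40, fp=5, fn=10, tn=45),  # 50 of 100 can repay
    "Squares": Outcomes(tp=16, fp=8, fn=4, tn=72),   # 20 of 100 can repay
}

for name, o in groups.items():
    print(f"{name}: FPR={o.false_positive_rate():.2f} "
          f"FNR={o.false_negative_rate():.2f} "
          f"PPV={o.positive_predictive_value():.2f}")
# Circles: FPR=0.10 FNR=0.20 PPV=0.89
# Squares: FPR=0.10 FNR=0.20 PPV=0.67

# Intersectionality: approval rates for four equal-sized subgroups (invented).
approval = {
    ("Red", "Circle"): 1.0, ("Red", "Square"): 0.0,
    ("Blue", "Circle"): 0.0, ("Blue", "Square"): 1.0,
}


def group_rate(value: str) -> float:
    """Approval rate for one attribute; a plain average is valid only because subgroups are equal-sized."""
    rates = [rate for key, rate in approval.items() if value in key]
    return sum(rates) / len(rates)


for value in ("Red", "Blue", "Circle", "Square"):
    print(value, group_rate(value))  # 0.5 for every single group...
print(approval)  # ...yet Red Circles and Blue Squares are always approved, the rest never
```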

Who should read this book?

The book is well written, using small examples to build up a series of logical steps which often lead to surprising conclusions. Most of the explanations are in plain English, without much mathematical notation. It would probably help readers to have a basic understanding of machine learning and some background in algorithm design. I can attest that a dimly remembered computer science degree from the 1990s will do the job, but I wouldn’t recommend the book for school pupils.